-

bridge building

upon the vlans that separate us

-

Capture a pcap from a router (Ubiquiti EdgeX)

Remote connection: Perhaps you’ve used Wireshark to capture packets on your laptop or PC. But what if you need to troubleshoot your router? A quick way to grab a dump is a simple ssh/tcpdump combo. It’s easy to do, and can be done remotely. First, your router must accept ssh connections for this method. In…
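A rough sketch of the ssh/tcpdump combo, wrapped in Python so the pieces are easy to see. The host name, the `eth0` interface, and the `not port 22` filter (to keep the ssh session itself out of the capture) are all assumptions for illustration, not taken from the post, and `build_capture_command` and `capture_to_file` are hypothetical helper names. Your router may also need `sudo` in front of `tcpdump`.

```python
import shlex
import subprocess


def build_capture_command(host, interface="eth0", capture_filter="not port 22"):
    """Build the argv that runs tcpdump on a remote host over ssh.

    host, interface, and capture_filter are placeholders; adjust for your router.
    """
    # -U flushes each packet as it arrives; -w - writes raw pcap data to stdout,
    # which ssh then forwards to the local machine.
    remote = "tcpdump -i {} -U -w - {}".format(
        shlex.quote(interface), shlex.quote(capture_filter)
    )
    return ["ssh", host, remote]


def capture_to_file(host, out_path, **kwargs):
    """Stream the remote capture into a local pcap file until interrupted (Ctrl-C)."""
    with open(out_path, "wb") as out:
        subprocess.run(build_capture_command(host, **kwargs), stdout=out, check=False)
```

Calling `capture_to_file("admin@router", "router.pcap")` would leave a file that Wireshark can open; the same command can of course be typed straight into a shell.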

-

ubuntu ultra-wide full screen virt-manager

According to the post, vgamem="32768" may work too. https://stackoverflow.com/a/55117033/811479 On another virtual machine, running Pop!_OS, I’ve seen this work too…

-

Network Manager Wifi Tool

`nmcli dev wifi list` Handy for quickly checking channel saturation on a Linux laptop, or anything with Wi-Fi and NetworkManager installed. For specific fields: `nmcli -f SSID,BSSID,DEVICE,BARS device wifi`
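To turn that listing into a quick saturation summary, here is a minimal sketch that counts visible networks per channel. It assumes the text comes from `nmcli -t -f CHAN dev wifi list` (terse mode, channel field only, one channel number per line); `networks_per_channel` is a hypothetical helper name and the sample data is made up.

```python
from collections import Counter


def networks_per_channel(nmcli_output):
    """Count networks per channel from `nmcli -t -f CHAN dev wifi list` output.

    Assumes one channel number per line; anything else is skipped.
    """
    counts = Counter()
    for line in nmcli_output.splitlines():
        line = line.strip()
        if line.isdigit():
            counts[int(line)] += 1
    return counts


# Made-up sample standing in for real nmcli output.
sample = "1\n6\n6\n11\n6\n"
for channel, count in sorted(networks_per_channel(sample).items()):
    print(f"channel {channel}: {count} network(s)")
```

On a real machine the input could come from `subprocess.run(["nmcli", "-t", "-f", "CHAN", "dev", "wifi", "list"], capture_output=True, text=True).stdout`.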

-

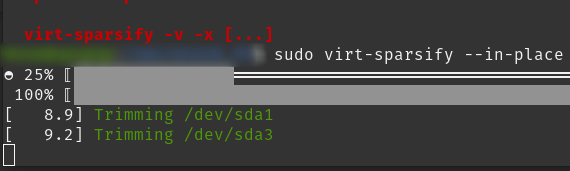

virt-sparsify --inplace

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/virtualization_deployment_and_administration_guide/virt-sparsify Use it to remove empty space from a qcow2 disk. Make sure the guest is compatible and, if the disk is for a VM, shut down. Windows should be by default. Check with this command, in cmd or PowerShell: from https://pve.proxmox.com/wiki/Shrink_Qcow2_Disk_Files#Windows_Guest_Preparation in the host The above example is a disk formatted with ntfs/fat etc. Other helpful…

-

steps to set up zfs samba share

References: https://wiki.samba.org/index.php/File_System_Support1 https://wiki.samba.org/index.php/Setting_up_a_Share_Using_Windows_ACLs#Preparing_the_Host https://jrs-s.net/2018/08/17/zfs-tuning-cheat-sheet/ https://arstechnica.com/information-technology/2020/05/zfs-101-understanding-zfs-storage-and-performance/ Initial setup All my testing is based on Ubuntu 20.10, samba4, zfs: Initial test Samba setup for basic sharing Test for acl support The output should be: HAVE_LIBACL If not, as the Samba wiki says, If no output is displayed: Samba was built using the --with-acl-support=no parameter. The Samba configure…

-

Using list comprehension to find combinations of cube dimensions that don’t add up to `n`

Input (stdin) 1 1 1 2 Expected Output [[0, 0, 0], [0, 0, 1], [0, 1, 0], [1, 0, 0], [1, 1, 1]]
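A minimal sketch of one way to get that output with a single list comprehension. It assumes the four input values are, in order, the three cube dimensions and then `n`; the function name `combos_not_summing_to` is made up for this example.

```python
def combos_not_summing_to(x, y, z, n):
    """Return every [i, j, k] with 0 <= i <= x, 0 <= j <= y, 0 <= k <= z
    whose coordinates do not add up to n."""
    return [
        [i, j, k]
        for i in range(x + 1)
        for j in range(y + 1)
        for k in range(z + 1)
        if i + j + k != n
    ]


print(combos_not_summing_to(1, 1, 1, 2))
# [[0, 0, 0], [0, 0, 1], [0, 1, 0], [1, 0, 0], [1, 1, 1]]
```

The nested `for` clauses run left to right like nested loops, which is why the result comes out in lexicographic order.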

-

JavaScript Notes

Hoisting: JavaScript hoists function declarations and variable declarations to the top of the current scope. Variable assignments are not hoisted. Declare functions and variables at the top of your scripts, so the syntax and behavior are consistent with each other. Shadowing: where you forget to put ‘var’, ‘let’, or ‘const’ before a new variable declaration in…

-

Quick lan scan

Sometimes, you just lose track of what’s on the network. If you want to scan your LAN, here’s a quick and dirty way (from a host with netcat installed; I’m using Ubuntu’s BSD version). (NOTE: Only do this on networks you own or are authorized to scan. You can get in trouble with this one.) https://nmap.org/book/legal-issues.html https://www.isecom.org/research.html…

-

Passing integers to components

remember: when passing an integer, wrap it in curly braces, like this, where I’m passing the integer 10 into the Items component.